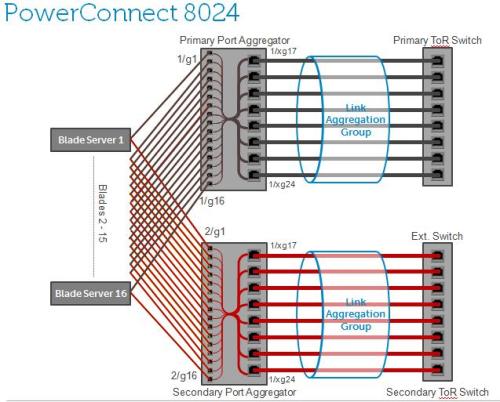

How: By enabling “Simple” mode on Dell PowerConnect blade switches, the administrator can map internal ports to external ports for traffic aggregation, using a feature called “Port Aggregator”.

– By default, PowerConnect switches are in “Normal” mode.

– The new series of SimpleConnect LAN I/O Modules works exclusively in Simple mode.

Port Aggregator provides the ability to aggregate traffic from multiple downlinks onto a single uplink, or a smaller number of uplinks, and so consolidates ports at the edge of the blade chassis.

The Port Aggregator feature minimizes the administration required for managing the Blade-based switches.

Benefits:

- Ease of deployment and management of I/O Modules for blade server connectivity: it is simple to configure. Just map the internal ports to the external ports and, if required, assign a VLAN to the group. The feature automatically configures multiple external ports into an LACP trunk group.

- Reduces the network admin's involvement in blade deployments: it eliminates the need to understand STP, VLANs, and LACP groups.

- SimpleConnect is interoperable: PowerConnect blade switches integrate with third-party I/O hardware (Cisco, etc.).

- Provides the cable aggregation benefit offered by integrated blade switches.

- The SimpleConnect feature provides loop-free operation without using STP.

- It works across a stack of switches (M6220 and M6348), so you can manage the stacked switches as one through the easy-to-use interface.

Check out this technology overview video series on SimpleConnect LAN modules for the M1000e Blade Chassis.

Video1: Shows how simple it is to deploy blade servers with SimpleConnect into existing data center LANs.

Video2: Shows how to deploy blade servers with SimpleConnect into existing VLANs in customer data centers.

Video3: Shows how easy it is to manage blade server traffic flows with SimpleConnect, connecting them to the existing LANs or VLANs in your data center.

Technique behind it:

– The downlink and uplink ports are grouped under a reserved VLAN, so traffic can only be flooded within that group and cannot cross into another group.

– Uplink ports are enabled as members of a LAG (i.e., they form a Link Aggregation Control Protocol (LACP) trunk group) to connect to the uplink switches.

– This aggregates traffic from multiple downlinks onto fewer uplinks, with load balancing across them.
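The grouping described above can be pictured with a small Python model. This is only an illustrative sketch, not the switch firmware's logic: the class name, port names, and VLAN IDs are hypothetical, and the uplink LAG is treated as a single logical port, as is usual for link aggregation.

```python
from dataclasses import dataclass


@dataclass
class AggregatorGroup:
    """Hypothetical model of one Port Aggregator group."""
    name: str
    reserved_vlan: int      # illustrative VLAN ID reserved for this group
    downlinks: frozenset    # internal (server-facing) ports
    uplinks: frozenset      # external ports, bundled into one LAG


def flood_targets(groups, ingress_port):
    """Return where a flooded frame may go, given the port it arrived on."""
    for group in groups:
        if ingress_port in group.downlinks:
            # From a server: other downlinks in the same group, plus the
            # group's LAG treated as one logical egress port.
            targets = set(group.downlinks) - {ingress_port}
            if group.uplinks:
                targets.add(f"lag:{group.name}")
            return targets
        if ingress_port in group.uplinks:
            # From the uplink LAG: only the group's downlinks. The frame is
            # never sent back out another member of the same LAG.
            return set(group.downlinks)
    return set()  # port is not mapped to any group


# Two hypothetical groups with made-up port names and VLAN IDs.
groups = [
    AggregatorGroup("A", 4001, frozenset({"in1", "in2"}), frozenset({"ext1", "ext2"})),
    AggregatorGroup("B", 4002, frozenset({"in3", "in4"}), frozenset({"ext3"})),
]

print(flood_targets(groups, "in1"))   # stays inside group A
print(flood_targets(groups, "ext3"))  # stays inside group B
```

In this model a frame entering on a port of group A never reaches a port of group B, which is the isolation the reserved VLAN provides.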

Configuring Simple Mode when connecting to Cisco switches

- Boot the M6220/M6348/M8024 switch with the default configuration.

- Configure four ports (port 1 to port 4) as members of a dynamic LAG on the Cisco switch.

- Connect a link from port 1 on the Cisco switch to any 1Gig external port on the M6220/M6348/M8024.

- Send traffic from both sides. Traffic is switched.

- Add another link from port 2 on the Cisco switch to any 1Gig external port on the M6220/M6348/M8024 switch. The new link is automatically added to the LAG used by the Port Aggregator group, and traffic is load-balanced across the links.
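To see what load balancing across a growing LAG means in the last step, here is a minimal Python sketch. It assumes flows are assigned to links by hashing the source and destination MAC addresses. That policy is a common one but is an assumption here, since the hash inputs used by these switches are not covered in this post. The function name, port names, and MAC addresses are made up for illustration.

```python
import zlib


def pick_uplink(active_links, src_mac, dst_mac):
    """Pick one LAG member for a flow by hashing its MAC pair (assumed policy)."""
    if not active_links:
        raise ValueError("LAG has no active links")
    key = f"{src_mac}-{dst_mac}".encode()
    # The same flow always hashes to the same link, so its frames stay in order.
    return active_links[zlib.crc32(key) % len(active_links)]


# Eight hypothetical server flows toward one upstream destination.
flows = [(f"00:00:00:00:00:{i:02x}", "00:00:00:00:ff:01") for i in range(1, 9)]

lag = ["ext1"]                      # first cable connected
before = {pick_uplink(lag, s, d) for s, d in flows}

lag.append("ext2")                  # second cable joins the same LAG
after = {pick_uplink(lag, s, d) for s, d in flows}

print("links in use with one cable:", before)
print("links in use with two cables:", after)
```

With a single link every flow uses it. Once the second link joins the LAG, flows can be spread over both without any change to the group's configuration.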

SimpleConnect lets you configure multiple port aggregation groups, so specific blade servers can be grouped and their traffic flows managed as needed.

It is also designed to connect virtualized blade servers simply, avoiding the complex configuration and management of standard switches.

Have any of you implemented this in your DataCenters today? Let me know…